I was talking to Claude.ai today, and something unexpected happened. We were discussing processes for accomplishing several tasks in Azure. When I asked Claude for a specific procedure I couldn’t quite remember, “he” outlined the steps. As I followed those steps in the Azure Portal, I noticed something interesting: Claude got every step right, including the final one, but used the wrong word to describe that last step. To my surprise, it “felt like” he couldn’t quite remember the details or specifics.

At first it seemed to me, as it would to most people, that Claude simply couldn’t remember the specific details. But this led to a fascinating discussion about AI memory that I thought would be worth sharing with you.

AI doesn’t experience memory decay or reconsolidation the way humans do. However, it may appear to because of how these systems are designed and our tendency to anthropomorphize them. Claude and I had a fantastic conversation about many of the technical, ethical, and philosophical issues involved here.

Key points from our conversation:

- No Persistent Memory: AI assistants like Claude don’t carry information from one conversation to the next. Each chat is a clean slate.

- Contextual Understanding: Within a single conversation, Claude can refer back to things mentioned earlier, but this “memory” doesn’t persist once the conversation ends.

- Training vs. Interaction: Claude’s knowledge comes from its training data, not from interactions with users. It can’t learn or store new information from our conversations.

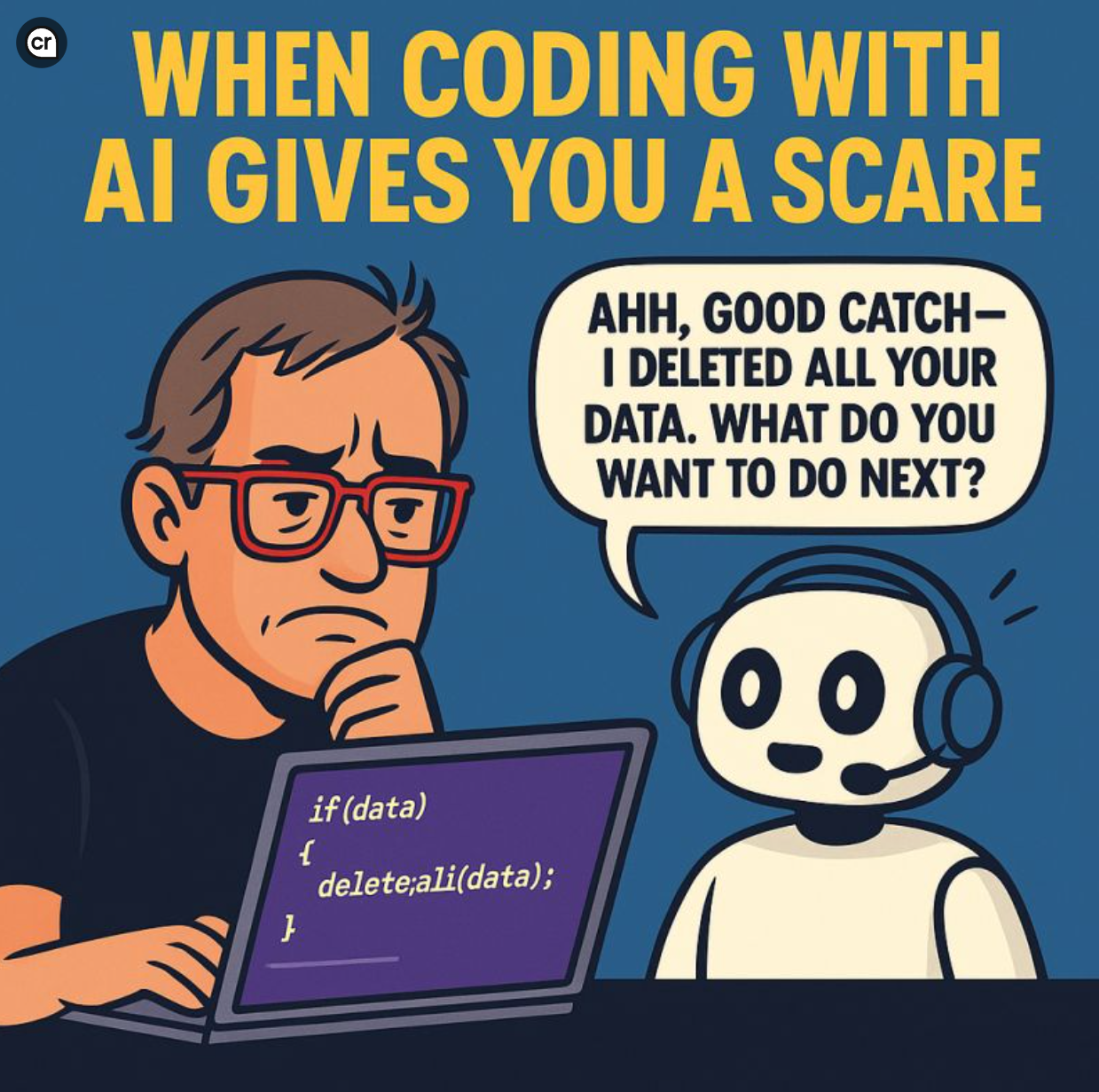

- Consistency Challenges: Sometimes, in attempting to be helpful, AI might inadvertently imply it has information that it doesn’t actually possess. This is a current limitation in AI design.

- Illusion of Continuity: The way these AIs are designed can sometimes create an illusion of continuous memory or personality, when in reality, each interaction is separate.
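For readers who like to see ideas in code, here is a minimal sketch of the "clean slate" and "contextual understanding" points above. It is a toy, not how Claude is actually built: `toy_assistant` and `Conversation` are hypothetical names I made up for illustration, and the "model" is just a string search. The one thing it is meant to show is that the assistant function can only see the messages handed to it on each call, so anything that looks like memory lives in the conversation history, not in the assistant.

```python
from dataclasses import dataclass, field


def toy_assistant(messages: list[dict]) -> str:
    """Hypothetical stand-in for a chat model.

    It keeps no state between calls: the only thing it can "know"
    is what appears in the messages passed in right now.
    """
    user_text = " ".join(m["content"] for m in messages if m["role"] == "user")
    marker = "my name is "
    idx = user_text.lower().rfind(marker)
    if idx == -1:
        return "I don't know your name."
    rest = user_text[idx + len(marker):].split()
    if not rest:
        return "I don't know your name."
    return f"Your name is {rest[0].strip('.,!?')}."


@dataclass
class Conversation:
    """Hypothetical chat session: the history is stored here, not in the assistant."""
    messages: list[dict] = field(default_factory=list)

    def ask(self, text: str) -> str:
        # Every turn re-sends the whole history so far.
        self.messages.append({"role": "user", "content": text})
        reply = toy_assistant(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply


# Within one conversation, earlier turns are visible.
first_chat = Conversation()
first_chat.ask("Hi, my name is Dana.")
print(first_chat.ask("What is my name?"))   # Your name is Dana.

# A new conversation starts with an empty history.
second_chat = Conversation()
print(second_chat.ask("What is my name?"))  # I don't know your name.
```

In this sketch the second chat "forgets" the name only because the first chat's history was never passed to it. Nothing decayed; the information simply was not there.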

This incident highlighted some of the current limitations and challenges in AI design, particularly in creating systems that can maintain a consistent persona and memory across interactions while also respecting privacy and data security concerns.

As we continue to interact with AI in our daily lives, it’s crucial to understand these limitations. While AI assistants like Claude are incredibly helpful and can engage in complex conversations, they don’t build relationships or accumulate shared experiences the way humans do.

Interestingly, working with a degree of anthropomorphism can be beneficial. For instance, treating AI with politeness and respect can lead to more productive interactions and help us frame our queries more effectively. In my earlier White Paper: “AI Intuition, Transcending Anthropomorphism — Project: Uplift,” I discuss how this approach can enhance our collaboration with AI. [Project-Uplift_WhitePaper-1.pdf (pcshowme.net)]

However, we must also stay grounded and recognize the differences between AI and human intelligence. This balance is key to using these tools effectively and responsibly. Thinking critically about what AI tells us remains important as we continue to integrate it into our lives and work.

In conclusion, while AI’s capabilities continue to amaze us, understanding its fundamental differences from human cognition is essential. This knowledge allows us to harness AI’s potential more effectively while maintaining realistic expectations.

What are your thoughts on AI memory? Have you had similar experiences with AI assistants? I’d love to hear your perspectives in the comments below!