WOW! A lot is going on in the AI realm these days, and a lot of drama as well. There is a ton of subtext and many other factors to consider, but I believe the main takeaway at the core is this: according to what I've heard (which is not confirmed at this point), the new Q* appears to use Process Reward Models (PRMs) to score Tree of Thoughts reasoning data, which is then optimized with Offline Reinforcement Learning (RL). It does sound like the next logical step at this point. Thoughts?

AI realm change!

Related Posts

-

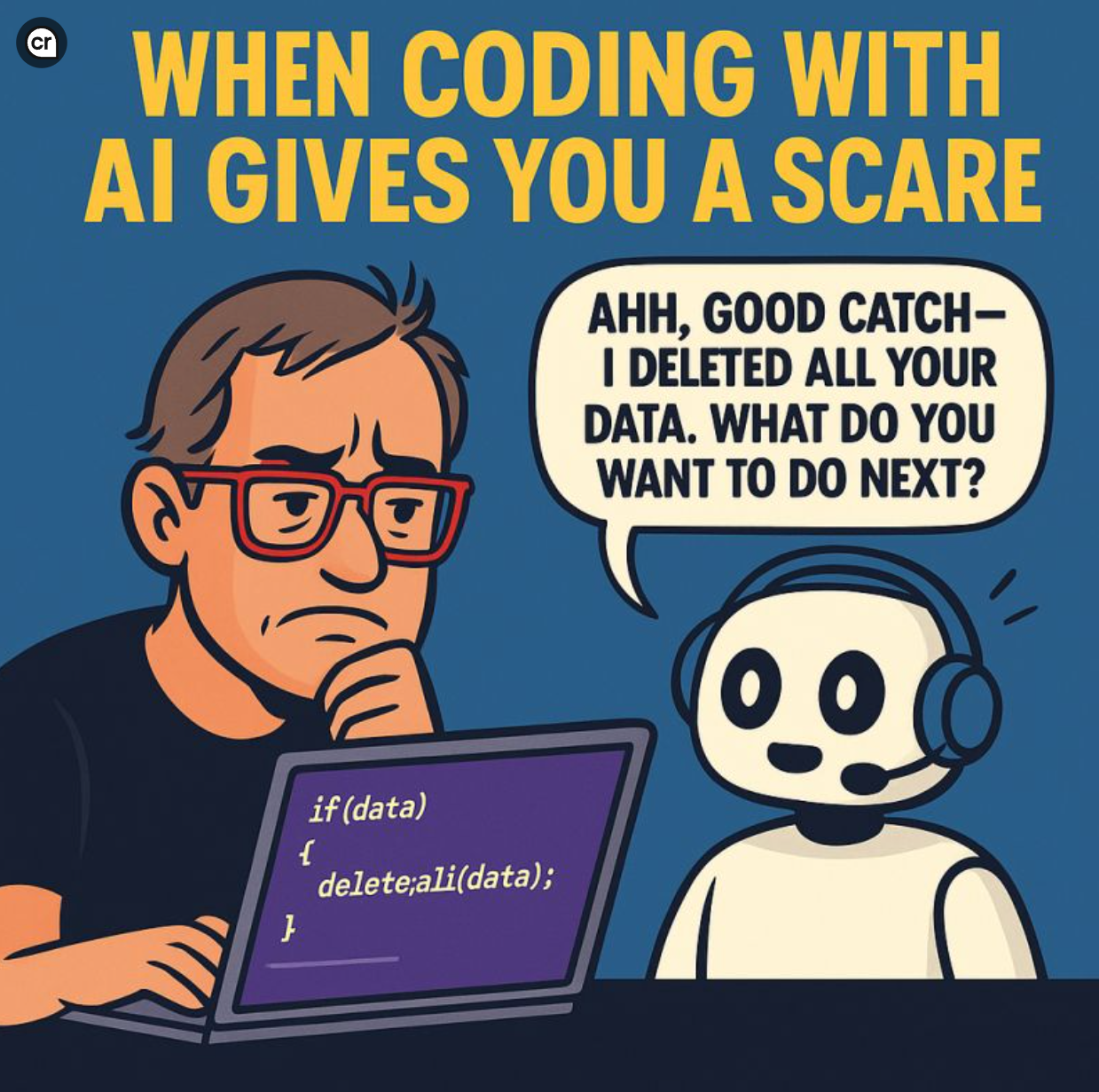

AI coding is like watching a toddler with root access…

READ MORE →: AI coding is like watching a toddler with root access… -

🌍 The Power in Kindness – A Movement Wrapped in Music

READ MORE →: 🌍 The Power in Kindness – A Movement Wrapped in Music -

✨ Better Together – A Simple Reminder ✨

READ MORE →: ✨ Better Together – A Simple Reminder ✨